Models for the Test Design

Model-based testing

There are a number of advantages to the use of model-based testing:

- It can help to improve the efficiency of the testing process by automating the generation of test cases.

- It can help to ensure that the tests cover a wide range of possible inputs and scenarios, increasing the likelihood of finding defects in the system.

- It can help reduce the cost of maintaining the test suite. Changes to the system can be reflected in the model, which can then be used to automatically generate updated test cases.

- It can help increase confidence in the quality of the system. Because the tests are derived from a model of the system's behavior, they are more likely to be comprehensive and relevant.
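To illustrate how test cases can be generated automatically from a model, here is a minimal sketch. It assumes a hypothetical connection model written as a finite state machine; the names `MODEL` and `generate_transition_cover` are illustrative and do not come from any particular tool. The generator produces one test case per transition, which is one simple coverage criterion among many.

```python
from collections import deque

# Hypothetical model: states and labelled transitions of a simple connection.
MODEL = {
    "closed": {"open": "connected"},
    "connected": {"send": "connected", "close": "closed"},
}


def generate_transition_cover(model, start):
    """Return one test case (a list of actions) per transition in the model."""
    # Breadth-first search: shortest action sequence that reaches each state.
    paths = {start: []}
    queue = deque([start])
    while queue:
        state = queue.popleft()
        for action, target in model[state].items():
            if target not in paths:
                paths[target] = paths[state] + [action]
                queue.append(target)

    # One test per transition: reach the source state, then fire the transition.
    tests = []
    for state, path in paths.items():
        for action in model[state]:
            tests.append(path + [action])
    return tests


TESTS = generate_transition_cover(MODEL, "closed")
for test in TESTS:
    print(" -> ".join(test))
```

If the system changes, for example by adding a new action, only `MODEL` has to be updated; the test cases are then regenerated from it.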

Model definition

Model-based testers use models to drive test design and analysis. They also take advantage of models for other test activities, such as the generation of test cases and the reporting of test results.

The ISTQB glossary of software testing terms defines model-based testing as «Testing based on or involving models».

Software specification vs. usage model

In general, a distinction is made between

- software specifications, which model the functional behavior of the system under test (SUT), and

- usage models that model the usage behavior of the future users of the software.

Test cases generated from a software specification are mostly used in component or unit tests. Usage models are applied to generate test cases for system and acceptance tests.
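As a rough sketch of the second kind of model, a usage model can be written as a probabilistic state machine from which test cases are sampled according to expected user behavior. Everything in this example is an assumption made for illustration: the states, the actions, the probabilities, and the helper `sample_test_case`.

```python
import random

# Hypothetical usage model: state -> list of (action, next_state, probability).
USAGE_MODEL = {
    "start": [("login", "home", 1.0)],
    "home": [("search", "results", 0.7), ("logout", "end", 0.3)],
    "results": [("open_item", "home", 0.6), ("logout", "end", 0.4)],
}


def sample_test_case(model, rng, start="start", end="end", max_steps=50):
    """Walk the usage model at random and return the sequence of user actions."""
    state, actions = start, []
    while state != end and len(actions) < max_steps:
        choices = model[state]
        weights = [probability for _, _, probability in choices]
        # Pick the next transition according to its usage probability.
        action, state, _ = rng.choices(choices, weights=weights, k=1)[0]
        actions.append(action)
    return actions


rng = random.Random(42)  # fixed seed so that the sampled suite is reproducible
CASES = [sample_test_case(USAGE_MODEL, rng) for _ in range(5)]
for case in CASES:
    print(case)
```

Frequently used paths appear more often in the sampled suite, which is why such models suit system and acceptance tests rather than unit tests.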

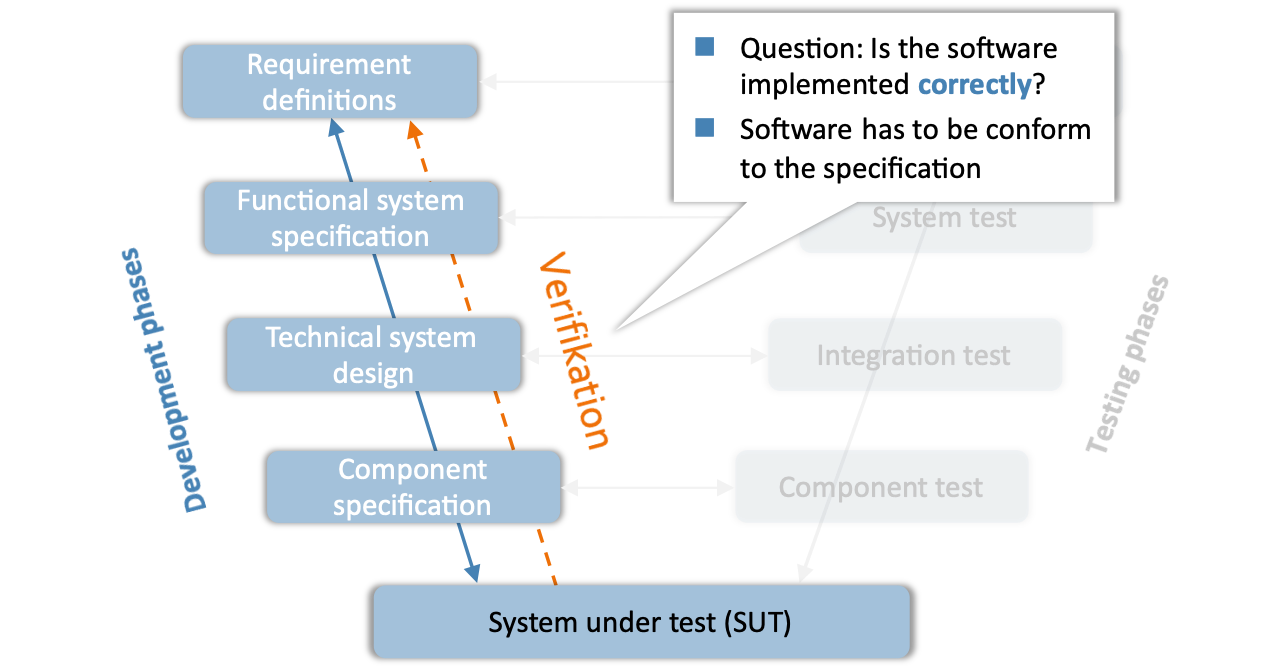

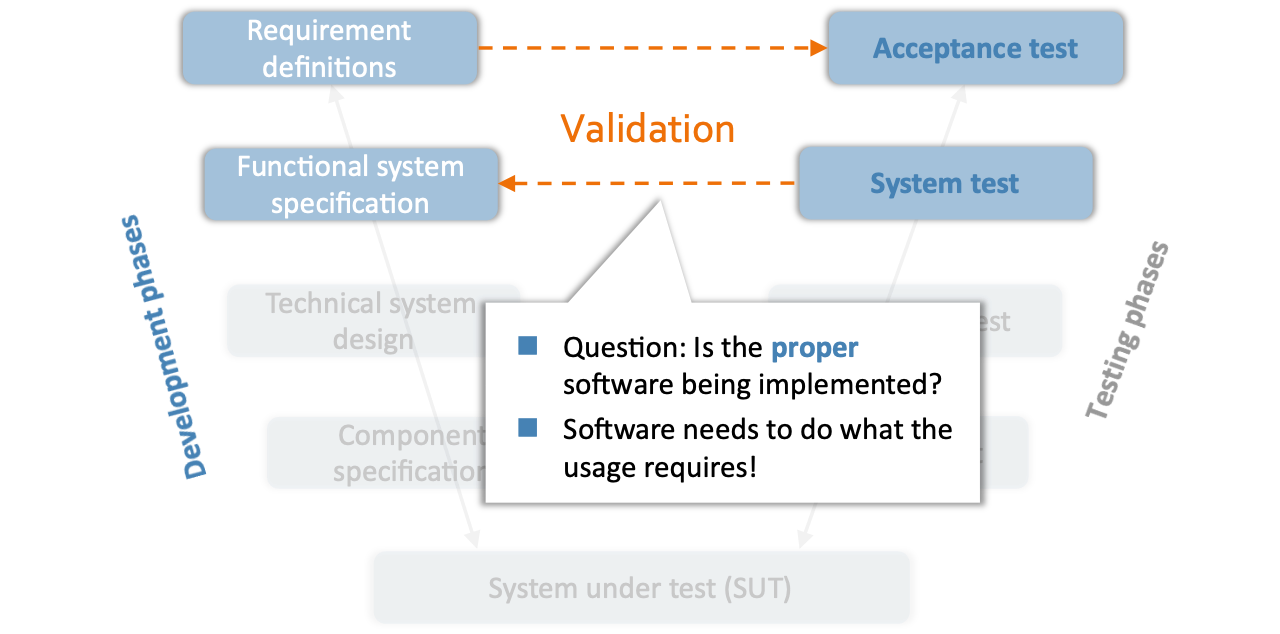

V-Model in the software development process

The widely used V-model clarifies this relation by distinguishing between the development and the testing of a system or of software.

Verification vs. validation

Verification and validation are two important activities in the development and testing of software and systems. Both are concerned with ensuring that the software or system is of high quality and meets the requirements and specifications, but they focus on different aspects of the development process.

System or software verification is a more formal and mathematical approach. The main goal is to verify that the software has been implemented without errors and conforms to the functional specification. This process is limited to the left branch of the V-model and does not require usage models.

System or software validation, on the other hand, is a more experimental approach in which test cases are executed and produce more or less detailed test reports. The main goal is to show that the right software has been implemented with respect to the given requirements. This process focuses on the right branch of the V-model and benefits from usage models.

Verification

- ≡ Verify that a piece of software is correct with respect to the required characteristics.

- Colloquially: "Has the software been built correctly?"

- In the V-model: checking whether the results of a development phase meet the requirements of the phase entry documents

- General properties (e.g. no program termination) and special properties (e.g. after a connection is established, the connection will always be closed)

- Informal approach (e.g. debugging, reviews, ...)

- Formal approach (e.g. theorem proving, model checking, abstract interpretation, Hoare logic, ...)
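The idea behind the formal approach can be sketched with a very small explicit-state check, in the spirit of model checking. The example below is a simplification under stated assumptions: the models `GOOD` and `BAD` are hypothetical, and the function checks only that a closed state remains reachable from every reachable open state. That is weaker than the connection property listed above, which demands that the connection is always closed eventually; real model checkers verify such properties with temporal logics.

```python
def reachable(model, start):
    """Return the set of all states reachable from start."""
    seen, stack = {start}, [start]
    while stack:
        state = stack.pop()
        for target in model[state].values():
            if target not in seen:
                seen.add(target)
                stack.append(target)
    return seen


def can_always_close(model, start, open_states, closed_state):
    """True if closed_state is reachable from every reachable open state."""
    return all(
        closed_state in reachable(model, state)
        for state in reachable(model, start)
        if state in open_states
    )


# Hypothetical model in which an open connection can always be closed.
GOOD = {
    "closed": {"open": "connected"},
    "connected": {"send": "connected", "close": "closed"},
}

# Hypothetical faulty model: "stuck" keeps the connection open forever.
BAD = {
    "closed": {"open": "connected"},
    "connected": {"send": "stuck", "close": "closed"},
    "stuck": {},
}

RESULT_GOOD = can_always_close(GOOD, "closed", {"connected"}, "closed")
RESULT_BAD = can_always_close(BAD, "closed", {"connected", "stuck"}, "closed")
print(RESULT_GOOD, RESULT_BAD)
```

Unlike a test, this check explores every reachable state of the model, so a positive result holds for the whole model and not only for the executed cases.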

Validation

- ≡ Validate that the software meets your requirements.

- Colloquially: “Has the right software been developed?”

- In the V-model: checking whether a particular result of the software meets the individual requirements for a specific intended use

- Typically checked by a test

Test execution

- ≡ A systematic examination of a piece of software to gain confidence in the implementation of the requirements and to find the effects of errors (it is not possible to prove the absence of errors!).